Anyone who has looked at the installation requirements for an OpenShift Baremetal IPI installation knows that a provisioning node is required for the deployment process. This node can be another physical server or a virtual machine; either way, it needs to be running Red Hat Enterprise Linux 8. The most common approach is for a customer to take one of the cluster's physical nodes, install RHEL 8 on it, deploy OpenShift, and then reincorporate that node into the newly built cluster as a worker. I have personally used a provisioning node virtualized on kvm/libvirt on a RHEL 8 host. In that case the deployment process, specifically the bootstrap virtual machine, is nested. That said, I am seeing a lot of requests from customers who want to use a virtual machine in VMware to handle the provisioning duties, especially since there really is no need to keep that node around after the provisioning process.

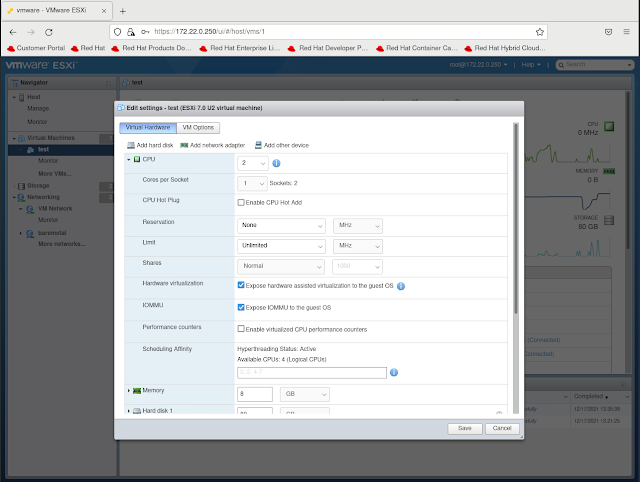

While it is entirely possible to use a VMware virtual machine as the provisioning node, there are some specific things that need to be configured to ensure that the nested bootstrap virtual machine can launch properly and obtain the correct networking to function and deploy the OpenShift cluster. The following attempts to highlight those requirements without providing a step-by-step installation guide, since I have written about the OpenShift BM IPI process many times before.

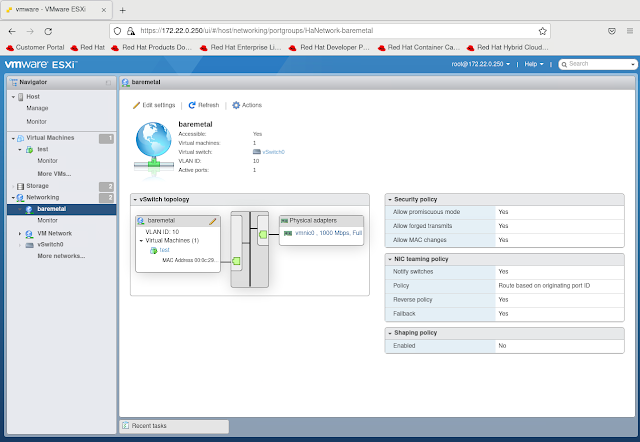

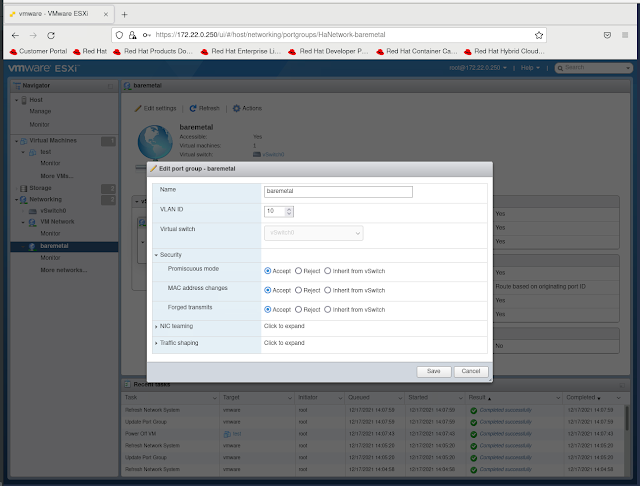

First, let's quickly take a look at the architecture of the provisioning virtual machine on VMware. The following figure shows a simple ESXi 7.x host (Intel NUC) with a single interface into it that carries multiple trunked vlans from a Cisco 3750.

On the Cisco 3750 we can see that the switch port is configured to trunk the two vlans that need to be present on the provisioning virtual machine running on the ESXi hypervisor host. The first is vlan 40, the provisioning network used for PXE booting the cluster nodes. Note that this vlan also needs to be the native vlan, because PXE does not know about vlan tags. The second is vlan 10, which provides access to the baremetal network; this one can be tagged. Other vlans are trunked to these ports, but they are not needed for this particular configuration and are only there for flexibility when I create virtual machines for other lab testing.

    !
    interface GigabitEthernet1/0/6
     switchport trunk encapsulation dot1q
     switchport trunk native vlan 40
     switchport trunk allowed vlan 10,20,30,40,50
     switchport mode trunk
     spanning-tree portfast trunk
    !
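
The two requirements described above (vlan 40 as the native vlan, vlan 10 present in the allowed list) are easy to get wrong when a port is reconfigured later. The following is a minimal sketch of a sanity check against a saved copy of the interface stanza. The function name `check_trunk` is hypothetical, and the sketch assumes the allowed list is a plain comma-separated list without ranges such as `10-50`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read an IOS interface stanza on stdin and verify that
#   $1    is the configured native vlan
#   $2... all appear in the "allowed vlan" list
# Assumption: the allowed list is comma-separated with no ranges (e.g. 10-50).
check_trunk() {
    local want_native=$1; shift
    local native="" allowed="" line vlan
    while IFS= read -r line; do
        case "$line" in
            *"switchport trunk native vlan "*)
                native=${line##*vlan }
                native=${native%%[[:space:]]*} ;;   # drop trailing whitespace
            *"switchport trunk allowed vlan "*)
                allowed=${line##*vlan }
                allowed=${allowed%%[[:space:]]*} ;;
        esac
    done
    if [ "$native" != "$want_native" ]; then
        echo "native vlan is '${native}', expected ${want_native}"
        return 1
    fi
    for vlan in "$@"; do
        # Wrap both sides in commas so that "10" does not match "110".
        case ",${allowed}," in
            *",${vlan},"*) ;;
            *) echo "vlan ${vlan} missing from allowed list '${allowed}'"
               return 1 ;;
        esac
    done
    echo "trunk ok: native=${native} allowed=${allowed}"
}
```

With the stanza above saved to a file (here a hypothetical `gi1-0-6.cfg`), `check_trunk 40 10 40 < gi1-0-6.cfg` would confirm that vlan 40 is native and that both vlan 10 and vlan 40 are allowed on the trunk.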

    $ virt-host-validate
      QEMU: Checking for hardware virtualization : PASS
      QEMU: Checking if device /dev/kvm exists : PASS
      QEMU: Checking if device /dev/kvm is accessible : PASS
      QEMU: Checking if device /dev/vhost-net exists : PASS
      QEMU: Checking if device /dev/net/tun exists : PASS
      QEMU: Checking for cgroup 'cpu' controller support : PASS
      QEMU: Checking for cgroup 'cpuacct' controller support : PASS
      QEMU: Checking for cgroup 'cpuset' controller support : PASS
      QEMU: Checking for cgroup 'memory' controller support : PASS
      QEMU: Checking for cgroup 'devices' controller support : PASS
      QEMU: Checking for cgroup 'blkio' controller support : PASS
      QEMU: Checking for device assignment IOMMU support : WARN (No ACPI DMAR table found, IOMMU either disabled in BIOS or not supported by this hardware platform)
      QEMU: Checking for secure guest support : WARN (Unknown if this platform has Secure Guest support)
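
Since the nested bootstrap virtual machine depends on these checks passing, it can be handy to reduce the output to a single pass/fail result. The sketch below is one possible way to do that. The function name `summarize_validate` is hypothetical, and it assumes each result line contains `: PASS`, `: WARN` or `: FAIL` as in the output above. WARN lines, such as the IOMMU and secure guest ones shown here, are reported but not treated as failures.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize virt-host-validate output read from stdin.
# Returns 0 when no check reports FAIL, 1 otherwise.
# WARN lines are echoed and counted but do not cause a failure.
summarize_validate() {
    local fails=0 warns=0 line
    while IFS= read -r line; do
        case "$line" in
            *": FAIL"*) fails=$((fails + 1)); printf 'FAIL: %s\n' "$line" ;;
            *": WARN"*) warns=$((warns + 1)); printf 'WARN: %s\n' "$line" ;;
        esac
    done
    printf 'fail=%d warn=%d\n' "$fails" "$warns"
    [ "$fails" -eq 0 ]
}

# Usage on the provisioning virtual machine:
#   virt-host-validate qemu | summarize_validate
```

A FAIL on the hardware virtualization or `/dev/kvm` checks would suggest that the virtual machine cannot see the hypervisor's virtualization extensions, in which case the nested bootstrap virtual machine is unlikely to start.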