I see a lot of customers asking about deploying an OpenShift Baremetal IPI lab or proof of concept in VMware. Many want to try out the deployment method without having to invest in physical hardware. The problem with VMware is that its virtual machines lack an Intelligent Platform Management Interface (IPMI). I am not knocking VMware here, because it does offer a robust API via vCenter that lets one do quite a bit of automation through scripting. However, the OpenShift Baremetal IPI install process requires IPMI or Redfish, which are standards on server hardware. There is a project that can fill this gap, but it should only be used for labs and proofs of concept, not production.

The project that solves this issue is called virtualbmc-for-vsphere. If the name virtualbmc sounds familiar, it's because that project was originally designed to provide IPMI to KVM virtual machines. This fork, virtualbmc-for-vsphere, uses the same concepts to provide an IPMI interface for VMware virtual machines; the difference is that its code talks to vCenter to power virtual machines on and off and to set their boot devices. Here are some examples of the IPMI commands that are supported:

# Power the virtual machine on, off, graceful off, reset, and NMI. Note that NMI is currently experimental

ipmitool -I lanplus -U admin -P password -H 192.168.0.1 -p 6230 power on|off|soft|reset|diag

# Check the power status

ipmitool -I lanplus -U admin -P password -H 192.168.0.1 -p 6230 power status

# Set the boot device to network, disk or cdrom

ipmitool -I lanplus -U admin -P password -H 192.168.0.1 -p 6230 chassis bootdev pxe|disk|cdrom

# Get the current boot device

ipmitool -I lanplus -U admin -P password -H 192.168.0.1 -p 6230 chassis bootparam get 5

# Get the channel info. Note that its output is always a dummy, not actual information.

ipmitool -I lanplus -U admin -P password -H 192.168.0.1 -p 6230 channel info

# Get the network info. Note that its output is always a dummy, not actual information.

ipmitool -I lanplus -U admin -P password -H 192.168.0.1 -p 6230 lan print 1

From the commands above, it looks like we get all the bits that are required from an IPMI standpoint when doing an OpenShift BareMetal IPI deployment.
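Since every command above repeats the same connection flags, a small wrapper can cut down on typing. The following is only a sketch: `vm_ipmi` and the `BMC_*` variables are hypothetical names of my own, and the defaults are simply the example values used above.

```shell
# Connection settings copied from the examples above; override via the environment.
BMC_HOST="${BMC_HOST:-192.168.0.1}"
BMC_PORT="${BMC_PORT:-6230}"
BMC_USER="${BMC_USER:-admin}"
BMC_PASS="${BMC_PASS:-password}"

# vm_ipmi is a hypothetical helper, not part of ipmitool or virtualbmc-for-vsphere.
# It prepends the connection flags and passes the remaining arguments through unchanged.
vm_ipmi() {
    ipmitool -I lanplus -U "$BMC_USER" -P "$BMC_PASS" -H "$BMC_HOST" -p "$BMC_PORT" "$@"
}

# Example calls (commented out so that sourcing this file has no side effects):
# vm_ipmi power status
# vm_ipmi chassis bootdev pxe
# vm_ipmi chassis bootparam get 5
```

Keeping the password in an environment variable is fine for a lab, but it is still visible in the process list while ipmitool runs.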

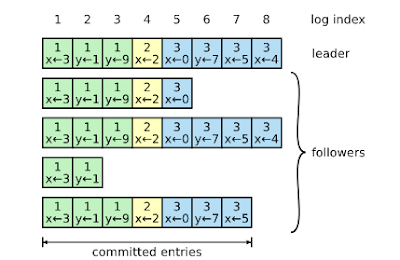

Before I show how to set up virtualbmc-for-vsphere, let's take a quick look at our test virtual machine within vCenter (vcenter.schmaustech.com). In the picture we can see that there is a virtual machine called rheltest which is currently powered on and has an IP address of 192.168.0.226. Once we get virtualbmc-for-vsphere configured, we will use IPMI commands to power down the host and then power it back up.

Now that we have familiarized ourselves with the VMware environment, let's take a moment to set up virtualbmc-for-vsphere. There are two methods of installation: using pip (more information can be found here) or running it as a container. In this discussion I will use the container method, since it is more portable for me and easier to stand up and remove from my lab environment. The first thing we need to do is pull the image:

# podman pull ghcr.io/kurokobo/vbmc4vsphere:0.0.4

Trying to pull ghcr.io/kurokobo/vbmc4vsphere:0.0.4...

Getting image source signatures

Copying blob 7a5d07f2fd13 done

Copying blob 25a245937421 done

Copying blob 2606867e5cc9 done

Copying blob 385bb58d08e6 done

Copying blob ab14b629693d done

Copying blob bf5952930446 done

Copying config 789cdc97ba done

Writing manifest to image destination

Storing signatures

789cdc97ba7461f673cc7ffc8395339f38869abb679ebd0703c2837f493062db

With the image pulled, we need to start the container with the syntax below. I should note that the -p option can be specified more than once with different port numbers. Each port number will then be used for one virtual machine running in VMware.

# podman run -d --name vbmc4vsphere -p "6801:6801/udp" -v vbmc-volume:/vbmc/.vbmc ghcr.io/kurokobo/vbmc4vsphere:0.0.4

ddf82bfdb7899e9232462ae3e8ea821d327b0db1bc8501c3827644aad9830736

# podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ddf82bfdb789 ghcr.io/kurokobo/vbmc4vsphere:0.0.4 --foreground 3 seconds ago Up 3 seconds ago 0.0.0.0:6801->6801/udp vbmc4vsphere
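For a lab with several virtual machines, typing one -p mapping per port gets tedious. Here is a minimal sketch that generates the mappings for a consecutive port range; `port_flags` is a hypothetical helper name of my own, and the range 6801 to 6803 is just an example.

```shell
# port_flags is a hypothetical helper: it prints one -p mapping per UDP port
# in the inclusive range FIRST..LAST.
port_flags() {
    first="$1"
    last="$2"
    port="$first"
    flags=""
    while [ "$port" -le "$last" ]; do
        flags="$flags -p $port:$port/udp"
        port=$((port + 1))
    done
    # Strip the leading space before printing.
    printf '%s\n' "${flags# }"
}

# Example (commented out; requires podman). $(...) is left unquoted on purpose
# so that the flags are split into separate arguments:
# podman run -d --name vbmc4vsphere $(port_flags 6801 6803) \
#     -v vbmc-volume:/vbmc/.vbmc ghcr.io/kurokobo/vbmc4vsphere:0.0.4
```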

Now that the vbmc4vsphere container is running, let's get a bash shell within the container:

# podman exec -it vbmc4vsphere /bin/bash

root@ddf82bfdb789:/#

Inside the container we will use the vbmc command to add our rheltest virtual machine. For this command to work we need to specify the port that will be listening (it should be one of the ports specified with the -p option at container run time), an IPMI username and password, the vCenter username and password, and the vCenter hostname or IP address:

root@ddf82bfdb789:/# vbmc add rheltest --port 6801 --username admin --password password --viserver 192.168.0.30 --viserver-password vcenterpassword --viserver-username administrator@vsphere.local

root@ddf82bfdb789:/# vbmc list

+----------+--------+---------+------+

| VM name | Status | Address | Port |

+----------+--------+---------+------+

| rheltest | down | :: | 6801 |

+----------+--------+---------+------+

root@ddf82bfdb789:/#

Once the entry is created, we need to start it so it's listening for incoming IPMI requests:

root@ddf82bfdb789:/# vbmc start rheltest

root@ddf82bfdb789:/# vbmc list

+----------+---------+---------+------+

| VM name | Status | Address | Port |

+----------+---------+---------+------+

| rheltest | running | :: | 6801 |

+----------+---------+---------+------+

root@ddf82bfdb789:/# exit

exit

#
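The add and start steps repeat for every virtual machine, each on its own port. The sketch below prints the vbmc commands for a list of VM names so they can be reviewed before being pasted into the container shell. `print_vbmc_commands` and the VM names in the example are hypothetical; the credentials and vCenter address are the placeholder values from the walkthrough.

```shell
# print_vbmc_commands is a hypothetical helper. It only prints commands for
# review; nothing is executed. VM names get consecutive ports from FIRST_PORT.
# Usage: print_vbmc_commands FIRST_PORT VM_NAME...
print_vbmc_commands() {
    port="$1"
    shift
    for vm in "$@"; do
        echo "vbmc add $vm --port $port --username admin --password password --viserver 192.168.0.30 --viserver-username administrator@vsphere.local --viserver-password vcenterpassword"
        echo "vbmc start $vm"
        port=$((port + 1))
    done
}

# Example (the VM names are placeholders for whatever exists in your vCenter):
# print_vbmc_commands 6801 master-0 master-1 master-2
```

Every port used here must also have been published with -p when the container was started.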

Now let's grab the IP address of the host where the virtualbmc-for-vsphere container is running. We need this value when we specify the host in our IPMI command:

# ip addr show dev ens3

2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:b9:97:58 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.10/24 brd 192.168.0.255 scope global noprefixroute ens3

valid_lft forever preferred_lft forever

inet6 fe80::6baa:4a96:db6b:88ee/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Now let's test whether we can see the power status of our rheltest host with ipmitool. We know from our previous screenshot that it was on. I will also run a ping to show the host is up and reachable.

# ipmitool -I lanplus -U admin -P password -H 192.168.0.10 -p 6801 power status

Chassis Power is on

# ping 192.168.0.226 -c 4

PING 192.168.0.226 (192.168.0.226) 56(84) bytes of data.

64 bytes from 192.168.0.226: icmp_seq=1 ttl=64 time=0.753 ms

64 bytes from 192.168.0.226: icmp_seq=2 ttl=64 time=0.736 ms

64 bytes from 192.168.0.226: icmp_seq=3 ttl=64 time=0.651 ms

64 bytes from 192.168.0.226: icmp_seq=4 ttl=64 time=0.849 ms

--- 192.168.0.226 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3109ms

rtt min/avg/max/mdev = 0.651/0.747/0.849/0.072 ms

We have confirmed the host is up, so let's go ahead and power it off:

# ipmitool -I lanplus -U admin -P password -H 192.168.0.10 -p 6801 power off

Chassis Power Control: Down/Off

Now let's check with ipmitool whether the status is also reported as off, and whether the host still responds to a ping:

# ipmitool -I lanplus -U admin -P password -H 192.168.0.10 -p 6801 power status

Chassis Power is off

# ping 192.168.0.226 -c 4 -t 10

PING 192.168.0.226 (192.168.0.226) 56(84) bytes of data.

From 192.168.0.10 icmp_seq=1 Destination Host Unreachable

From 192.168.0.10 icmp_seq=2 Destination Host Unreachable

From 192.168.0.10 icmp_seq=3 Destination Host Unreachable

From 192.168.0.10 icmp_seq=4 Destination Host Unreachable

--- 192.168.0.226 ping statistics ---

4 packets transmitted, 0 received, +4 errors, 100% packet loss, time 3099ms

pipe 4

Looks like the host is off and no longer responding, which is what we expected. From the vCenter console we can see rheltest has also been powered off. I should note that since virtualbmc-for-vsphere uses the VMware APIs under the covers, the shutdown task also got recorded in vCenter under recent tasks.

Let's go ahead and power rheltest back on with the ipmitool command:

# ipmitool -I lanplus -U admin -P password -H 192.168.0.10 -p 6801 power on

Chassis Power Control: Up/On

We can again use ipmitool to validate the power status and ping to validate the connectivity:

# ipmitool -I lanplus -U admin -P password -H 192.168.0.10 -p 6801 power status

Chassis Power is on

# ping 192.168.0.226 -c 4

PING 192.168.0.226 (192.168.0.226) 56(84) bytes of data.

64 bytes from 192.168.0.226: icmp_seq=1 ttl=64 time=0.860 ms

64 bytes from 192.168.0.226: icmp_seq=2 ttl=64 time=1.53 ms

64 bytes from 192.168.0.226: icmp_seq=3 ttl=64 time=0.743 ms

64 bytes from 192.168.0.226: icmp_seq=4 ttl=64 time=0.776 ms

--- 192.168.0.226 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3066ms

rtt min/avg/max/mdev = 0.743/0.976/1.528/0.323 ms

Looks like rheltest is back up again and reachable. The vCenter console also shows that rheltest has been powered on again.
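When scripting these power cycles, it helps to wait until the virtual BMC actually reports the expected state rather than checking once by hand. This is a rough sketch only: `wait_for_power` is a hypothetical helper, the host, port, and credentials are the lab values used above, and it matches the exact "Chassis Power is on" and "Chassis Power is off" strings seen in the output earlier.

```shell
# wait_for_power is a hypothetical helper: it polls "power status" until the
# output reports the wanted state (on or off) or the attempts run out.
# Usage: wait_for_power STATE [ATTEMPTS] [DELAY_SECONDS]
# Returns 0 once the state matches, 1 if it never does.
wait_for_power() {
    want="$1"
    attempts="${2:-30}"
    delay="${3:-2}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        status="$(ipmitool -I lanplus -U admin -P password -H 192.168.0.10 -p 6801 power status 2>/dev/null)"
        if [ "$status" = "Chassis Power is $want" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example (commented out; requires ipmitool and a running virtual BMC):
# ipmitool -I lanplus -U admin -P password -H 192.168.0.10 -p 6801 power off
# wait_for_power off && echo "rheltest is down"
```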

Now that we understand how virtualbmc-for-vsphere works, it would be rather easy to configure an OpenShift BareMetal IPI lab inside of VMware. While I will not go into the details here, I have written additional blogs around the requirements for doing a baremetal IPI deployment, and those should be no different in this scenario now that we have the IPMI requirement met in VMware.
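To connect this back to the installer: in a baremetal IPI install-config.yaml, each entry under platform.baremetal.hosts carries a bmc address, username, and password, and the virtual BMC endpoint goes in that address field. The sketch below prints one such entry. It assumes the installer accepts an explicit port in the ipmi:// address; `bmc_host_entry`, the host name, and the MAC placeholder are hypothetical, and the IP address and credentials are the lab values from this post.

```shell
# bmc_host_entry is a hypothetical helper that prints one hosts entry for the
# platform.baremetal.hosts list of install-config.yaml.
# Usage: bmc_host_entry NAME ROLE BMC_PORT BOOT_MAC
bmc_host_entry() {
    cat <<EOF
      - name: $1
        role: $2
        bmc:
          address: ipmi://192.168.0.10:$3
          username: admin
          password: password
        bootMACAddress: $4
EOF
}

# Example (replace the placeholder with the MAC address of the VM's
# provisioning network interface as shown in vCenter):
# bmc_host_entry master-0 master 6801 "<vm-mac-address>"
```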

There is a lot of discussion about using GPUs for AI/ML workloads, and while some of those workloads run in containers, there are still use cases where they run in virtual machines. In OpenShift, when using containerized virtualization, one can run virtual machines and use a PCI passthrough configuration to pass one of the GPUs up into the virtual machine. This is clearly defined in the documentation here. However, there are cases where the virtual machine does not need the entire GPU, so rather than waste cycles we can pass a slice of the GPU into the virtual machine as a vGPU. In this blog I will demonstrate how to configure and pass a virtual GPU up into a Linux virtual machine.
