In a previous blog I walked through a disconnected single node OpenShift deployment using the OpenShift installer. In this blog I will reuse many of the same steps, but instead of installing on an x86 system we will try our hand at installing on an Nvidia Jetson AGX, which contains an Arm processor.

Before we begin, let's cover the prerequisites this blog assumes already exist:

- Podman, the oc binary and the openshift-install binary already exist on the system

- A disconnected registry is already configured and has the mirrored aarch64 contents of the images for a given OpenShift release.

- A physical Nvidia Jetson AGX with UEFI firmware and the ability to boot an ISO image from USB

- DNS entries for basic baremetal IPI requirements exist. My environment is below:

master-0.kni7.schmaustech.com IN A 192.168.0.47

*.apps.kni7.schmaustech.com IN A 192.168.0.47

api.kni7.schmaustech.com IN A 192.168.0.47

api-int.kni7.schmaustech.com IN A 192.168.0.47
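Since a missing or wrong record is an easy thing to overlook, it can be worth checking these names before going any further. The following is only a minimal sketch and not part of my original procedure: the `resolve` and `check_dns` function names are hypothetical, `getent` is assumed to be available on the workstation, and `test.apps` is just an arbitrary name used to exercise the wildcard record.

```shell
# Hypothetical helper names; adjust the domain and address to your environment.
CLUSTER_DOMAIN="${CLUSTER_DOMAIN:-kni7.schmaustech.com}"
EXPECTED_IP="${EXPECTED_IP:-192.168.0.47}"

# Print the first IPv4 address a name resolves to (empty if it does not resolve).
resolve() {
  getent ahostsv4 "$1" | awk 'NR == 1 { print $1 }'
}

# Check the node, API and wildcard records; returns non-zero if any is wrong.
check_dns() {
  local rc=0 host fqdn ip
  for host in master-0 api api-int test.apps; do
    fqdn="${host}.${CLUSTER_DOMAIN}"
    ip="$(resolve "$fqdn")"
    if [ "$ip" = "$EXPECTED_IP" ]; then
      echo "OK   $fqdn -> $ip"
    else
      echo "FAIL $fqdn -> ${ip:-unresolved}"
      rc=1
    fi
  done
  return "$rc"
}
```

Running `check_dns` from the host that will generate the ignition file prints one line per record and returns a non-zero status if any of them does not point at the node.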

First let's verify the version of OpenShift we will be deploying by looking at the output of oc version and openshift-install version:

$ oc version

Client Version: 4.9.0-rc.1

$ ./openshift-install version

./openshift-install 4.9.0-rc.1

built from commit 6b4296b0df51096b4ff03e4ec4aeedeead3425ab

release image quay.io/openshift-release-dev/ocp-release@sha256:2cce76f4dc2400d3c374f76ac0aa4e481579fce293e732f0b27775b7218f2c8d

release architecture amd64

While it looks like we will be deploying 4.9.0-rc.1, we will technically be deploying 4.9.0-rc.2 for aarch64. We will set a release image override for the aarch64 4.9.0-rc.2 release a little further along in the process. Before that, though, ensure the disconnected registry being used has the 4.9.0-rc.2 images mirrored. If not, use a procedure like the one in my previous blogs to mirror the 4.9.0-rc.2 images.

Now let's pull down a few files we will need for our deployment ISO. We need both the coreos-installer binary and the RHCOS live ISO:
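The architecture mismatch is visible in the `release architecture` line of the version output shown earlier. As a rough sketch (the function names `release_arch` and `needs_override` are hypothetical, and the parsing assumes the output format shown in this post), the check could be scripted like this:

```shell
# Read `openshift-install version` output on stdin and print the release architecture.
release_arch() {
  awk '/^release architecture/ { print $3 }'
}

# Succeeds when the installer's default release payload is NOT aarch64,
# meaning a release image override is needed before generating ignition.
needs_override() {
  local arch
  arch="$(release_arch)"
  [ "$arch" != "aarch64" ]
}
```

For example, `./openshift-install version | needs_override && echo "release image override required"` would print the reminder on my x86 workstation.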

$ wget https://mirror.openshift.com/pub/openshift-v4/clients/coreos-installer/v0.8.0-3/coreos-installer

--2021-09-16 10:10:26-- https://mirror.openshift.com/pub/openshift-v4/clients/coreos-installer/v0.8.0-3/coreos-installer

Resolving mirror.openshift.com (mirror.openshift.com)... 54.172.173.155, 54.173.18.88

Connecting to mirror.openshift.com (mirror.openshift.com)|54.172.173.155|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7649968 (7.3M)

Saving to: ‘coreos-installer’

coreos-installer 100%[=====================================================================================================================>] 7.29M 8.83MB/s in 0.8s

2021-09-16 10:10:27 (8.83 MB/s) - ‘coreos-installer’ saved [7649968/7649968]

$ wget https://mirror.openshift.com/pub/openshift-v4/aarch64/dependencies/rhcos/pre-release/4.9.0-rc.2/rhcos-live.aarch64.iso

--2021-09-16 10:10:40-- https://mirror.openshift.com/pub/openshift-v4/aarch64/dependencies/rhcos/pre-release/4.9.0-rc.2/rhcos-live.aarch64.iso

Resolving mirror.openshift.com (mirror.openshift.com)... 54.172.173.155, 54.173.18.88

Connecting to mirror.openshift.com (mirror.openshift.com)|54.172.173.155|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1031798784 (984M) [application/octet-stream]

Saving to: ‘rhcos-live.aarch64.iso’

rhcos-live.aarch64.iso 100%[=====================================================================================================================>] 984.00M 11.2MB/s in 93s

2021-09-16 10:12:13 (10.6 MB/s) - ‘rhcos-live.aarch64.iso’ saved [1031798784/1031798784]

Set the execute bit on coreos-installer, the utility we will use to embed the ignition file we will generate:

$ chmod 755 coreos-installer
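A nearly 1 GB ISO is worth verifying before it gets written to USB. The sketch below is an optional extra and makes an assumption I have not shown in this post: that a `sha256sum`-style listing (for example a `sha256sum.txt` file) is published alongside the ISO on the mirror. The helper names `expected_digest` and `verify_sha256` are hypothetical.

```shell
# Look up the expected digest for a file name in a sha256sum-style listing
# (lines of the form "<digest>  <name>" or "<digest> *<name>").
expected_digest() {
  local listing="$1" name="$2"
  awk -v n="$name" '{ f = $2; sub(/^\*/, "", f); if (f == n) print $1 }' "$listing"
}

# Compare a file's SHA-256 digest with an expected value.
verify_sha256() {
  local file="$1" expected="$2" actual
  actual="$(sha256sum "$file" | awk '{ print $1 }')"
  if [ "$actual" = "$expected" ]; then
    echo "OK   $file"
  else
    echo "FAIL $file: expected $expected, got $actual"
    return 1
  fi
}
```

With the listing downloaded next to the ISO, something like `verify_sha256 rhcos-live.aarch64.iso "$(expected_digest sha256sum.txt rhcos-live.aarch64.iso)"` would confirm the download.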

Let's go ahead now and create an install-config.yaml for our single node deployment. Notice some of the differences in this install-config.yaml. Specifically, we have no worker nodes defined, one master node defined, and a BootstrapInPlace section that tells the installer to install to the nvme0n1 device in the node. We also have imageContentSources, which tells the installer to use the local registry mirror I have already preconfigured.

$ cat << EOF > install-config.yaml

apiVersion: v1beta4
baseDomain: schmaustech.com
metadata:
  name: kni7
networking:
  networkType: OpenShiftSDN
  machineCIDR: 192.168.0.0/24
compute:
- name: worker
  replicas: 0
controlPlane:
  name: master
  replicas: 1
platform:
  none: {}
BootstrapInPlace:
  InstallationDisk: /dev/nvme0n1

pullSecret: '{ "auths": { "rhel8-ocp-auto.schmaustech.com:5000": {"auth": "ZHVtbXk6ZHVtbXk=","email": "bschmaus@schmaustech.com" } } }'

sshKey: 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDP+5QkRCiuhsYItXj7DzLcOIs2RbCgpMzDtPlt/hfLnDkLGozYIFapMp+o4l+6ornbZ3L+hYE0T8SyvyYVWfm1XpPcVgUIW6qp7yfEyTSRhpGnoY74PD33FIf6BtU2HoFLWjQcE6OrQOF0wijI3fgL0jSzvAxvYoXU/huMx/kI2jBcWEq5cADRfvpeYXhVEJLrIIOepoAZE1syaPT7jQEoLDfvxrDZPKObCOI2vzLiAQXI7gK1uc9YDb6IEA/4Ik4eV2R1+VCgKhgk5RUqn69+8a1o783g1tChKuLwA4K9lyEAbFBwlHMctfNOLeC1w+bYpDXH/3GydcYfq79/18dVd+xEUlzzC+2/qycWG36C1MxUZa2fXvSRWLnpkLcxtIes4MikFeIr3jkJlFUzITigzvFrKa2IKaJzQ53WsE++LVnKJfcFNLtWfdEOZMowG/KtgzSSac/iVEJRM2YTIJsQsqhhI4PTrqVlUy/NwcXOFfUF/NkF2deeUZ21Cdn+bKZDKtFu2x+ujyAWZKNq570YaFT3a4TrL6WmE9kdHnJOXYR61Tiq/1fU+y0fv1d0f1cYr4+mNRCGIZoQOgJraF7/YluLB23INkJgtbah/0t1xzSsQ59gzFhRlLkW9gQDekj2tOGJmZIuYCnTXGiqXHnri2yAPexgRiaFjoM3GCpsWw== bschmaus@bschmaus.remote.csb'

imageContentSources:
- mirrors:
  - rhel8-ocp-auto.schmaustech.com:5000/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - rhel8-ocp-auto.schmaustech.com:5000/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev
additionalTrustBundle: |
  -----BEGIN CERTIFICATE-----
  MIIF7zCCA9egAwIBAgIUeecEs+U5psgJ0aFgc4Q5dGVrAFcwDQYJKoZIhvcNAQEL
  BQAwgYYxCzAJBgNVBAYTAlVTMRYwFAYDVQQIDA1Ob3J0aENhcm9saW5hMRAwDgYD
  VQQHDAdSYWxlaWdoMRAwDgYDVQQKDAdSZWQgSGF0MRIwEAYDVQQLDAlNYXJrZXRp
  bmcxJzAlBgNVBAMMHnJoZWw4LW9jcC1hdXRvLnNjaG1hdXN0ZWNoLmNvbTAeFw0y
  MTA2MDkxMDM5MDZaFw0yMjA2MDkxMDM5MDZaMIGGMQswCQYDVQQGEwJVUzEWMBQG
  A1UECAwNTm9ydGhDYXJvbGluYTEQMA4GA1UEBwwHUmFsZWlnaDEQMA4GA1UECgwH
  UmVkIEhhdDESMBAGA1UECwwJTWFya2V0aW5nMScwJQYDVQQDDB5yaGVsOC1vY3At
  YXV0by5zY2htYXVzdGVjaC5jb20wggIiMA0GCSqGSIb3DQEBAQUAA4ICDwAwggIK
  AoICAQC9exAg3Ie3N3mkrQKseyri1VP2IPTc+pUEiVCPisIQAhRUfHhPR1HT7EF7
  SwaxrWjpfh9aYBPDEF3uLFQvzDEJWCh5PF55jwn3aABFGKEhfVBKd+es6nXnYaCS
  8CgLS2qM9x4WiuZxrntfB16JrjP+CrTvlAbE4DIMlDQLgh8+hDw9VPlbzY+MI+WC
  cYues1Ne+JZ5dZcKmCZ3zrVToPjreWZUuhSygci2xIQZxwWNmTvAgi+CAiQZS7VF
  RmKjj2H/o/d3I+XSS2261I8aXCAw4/3vaM9aci0eHeEhLIMrhv86WycOjcYL1Z6R
  n55diwDTSyrTo/B4zsQbmYUc8rP+pR2fyRJEGFVJ4ejcj2ZF5EbgUKupyU2gh/qt
  QeYtJ+6uAr9S5iQIcq9qvD9nhAtm3DnBb065X4jVPl2YL4zsbOS1gjoa6dRbFuvu
  f3SdsbQRF/YJWY/7j6cUaueCQOlXZRNhbQQHdIdBWFObw0QyyYtI831ue1MHPG0C
  nsAriPOkRzBBq+BPmS9CqcRDGqh+nd9m9UPVDoBshwaziSqaIK2hvfCAVb3BPJES
  CXKuIaP2IRzTjse58aAzsRW3W+4e/v9fwAOaE8nS7i+v8wrqcFgJ489HnVq+kRNc
  VImv5dBKg2frzXs1PpnWkE4u2VJagKn9B2zva2miRQ+LyvLLDwIDAQABo1MwUTAd
  BgNVHQ4EFgQUbcE9mpTkOK2ypIrURf+xYR08OAAwHwYDVR0jBBgwFoAUbcE9mpTk
  OK2ypIrURf+xYR08OAAwDwYDVR0TAQH/BAUwAwEB/zANBgkqhkiG9w0BAQsFAAOC
  AgEANTjx04NoiIyw9DyvszwRdrSGPO3dy1gk3jh+Du6Dpqqku3Mwr2ktaSCimeZS
  4zY4S5mRCgZRwDKu19z0tMwbVDyzHPFJx+wqBpZKkD1FvOPKjKLewtiW2z8AP/kF
  gl5UUNuwvGhOizazbvd1faQ8jMYoZKifM8On6IpFgqXCx98/GOWvnjn2t8YkMN3x
  blKVm5N7eGy9LeiGRoiCJqcyfGqdAdg+Z+J94AHEZb3OxG8uHLrtmz0BF3A+8V2H
  hofYI0spx5y9OcPin2yLm9DeCwWAA7maqdImBG/QpQCjcPW3Yzz9VytIMajPdnvd
  vbJF5GZNj7ods1AykCCJjGy6n9WCf3a4VLnZWtUTbtz0nrIjJjsdlXZqby5BCF0G
  iqWbg0j8onl6kmbMAhssRTlvL8w90F1IK3Hk+lz0Qy8rqZX2ohObtEYGMIAOdFJ1
  iPQrbksXOBpZNtm1VAved41sYt1txS2WZQgfklIXOhNOu4r32ZGKas4EJml0l0wL
  2P65PkPEa7AOeqwP0y6eGoNG9qFSl+yArycZGWudp88977H6CcdkdEcQzmjg5+TD
  9GHm3drUYGqBJDvIemQaXfnwy9Gxx+oBDpXLXOuo+edK812zh/q7s2FELfH5ZieE
  Q3dIH8UGsnjYxv8G3O23cYKZ1U0iiu9QvPRFm0F8JuFZqLQ=
  -----END CERTIFICATE-----

EOF
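One detail of the install-config.yaml that is easy to get wrong is the `auth` value in the pullSecret: it is the base64 encoding of `user:password` for the mirror registry. The sketch below shows how such a value could be produced. The helper names `registry_auth` and `pull_secret_json` are hypothetical, and the helpers assume the values contain no quotes or backslashes since no JSON escaping is done.

```shell
# Build the base64 "auth" value for a registry pull secret from a user and password.
registry_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Emit a single-registry pull secret as one line of JSON.
# Arguments: registry host:port, user, password, email.
pull_secret_json() {
  local registry="$1" user="$2" password="$3" email="$4"
  printf '{ "auths": { "%s": { "auth": "%s", "email": "%s" } } }\n' \
    "$registry" "$(registry_auth "$user" "$password")" "$email"
}
```

Going the other way, `echo ZHVtbXk6ZHVtbXk= | base64 -d` shows that the sample value in this post holds placeholder credentials.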

Before we can create the ignition file from the install-config.yaml we need to set the image release override variable. We do this because all of this work is currently done on an x86 host but we are trying to generate an ignition file for an aarch64 host. To set the image release override we will simply curl the aarch64 4.9.0-rc.2 release text and grab the quay release line:

$ export OPENSHIFT_INSTALL_RELEASE_IMAGE_OVERRIDE=$(curl -s https://mirror.openshift.com/pub/openshift-v4/aarch64/clients/ocp/4.9.0-rc.2/release.txt| grep 'Pull From: quay.io' | awk -F ' ' '{print $3}' | xargs)

$ echo $OPENSHIFT_INSTALL_RELEASE_IMAGE_OVERRIDE

quay.io/openshift-release-dev/ocp-release@sha256:edd47e590c6320b158a6a4894ca804618d3b1e774988c89cd988e8a841cb5f3c
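If the curl fails or the release text changes shape, the one-liner above quietly leaves the variable empty and the installer falls back to its built-in amd64 release. A slightly more defensive variant, as a sketch only (the function names `release_pullspec` and `is_digest_pullspec` are hypothetical), splits the parsing from a sanity check on the result:

```shell
# Extract the release image pull spec from release.txt content on stdin.
release_pullspec() {
  awk '/Pull From: quay\.io/ { print $3 }'
}

# Accept only a digest-pinned pull spec (repository@sha256:<64 hex characters>).
is_digest_pullspec() {
  printf '%s\n' "$1" | grep -Eq '^[^@[:space:]]+@sha256:[0-9a-f]{64}$'
}

# Example use (commented out because it needs network access):
# RELEASE_TXT_URL=https://mirror.openshift.com/pub/openshift-v4/aarch64/clients/ocp/4.9.0-rc.2/release.txt
# pullspec="$(curl -s "$RELEASE_TXT_URL" | release_pullspec)"
# is_digest_pullspec "$pullspec" && export OPENSHIFT_INSTALL_RELEASE_IMAGE_OVERRIDE="$pullspec"
```

The export only happens when the extracted value looks like a digest-pinned image reference, so an empty or malformed result is caught before the installer runs.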

Once we have the install-config.yaml and the image release override variable set, we can use the openshift-install binary to generate a single node OpenShift ignition config:

$ ./openshift-install --dir=./ create single-node-ignition-config

INFO Consuming Install Config from target directory

WARNING Making control-plane schedulable by setting MastersSchedulable to true for Scheduler cluster settings

WARNING Found override for release image. Please be warned, this is not advised

INFO Single-Node-Ignition-Config created in: . and auth

$ ls -lart

total 1017468

-rwxr-xr-x. 1 bschmaus bschmaus 7649968 Apr 27 00:49 coreos-installer

-rw-rw-r--. 1 bschmaus bschmaus 1031798784 Jul 22 13:10 rhcos-live.aarch64.iso

-rw-r--r--. 1 bschmaus bschmaus 3667 Sep 15 10:35 install-config.yaml.save

drwx------. 27 bschmaus bschmaus 8192 Sep 15 10:39 ..

drwxr-x---. 2 bschmaus bschmaus 50 Sep 15 10:45 auth

-rw-r-----. 1 bschmaus bschmaus 284253 Sep 15 10:45 bootstrap-in-place-for-live-iso.ign

-rw-r-----. 1 bschmaus bschmaus 1865601 Sep 15 10:45 .openshift_install_state.json

-rw-rw-r--. 1 bschmaus bschmaus 213442 Sep 15 10:45 .openshift_install.log

-rw-r-----. 1 bschmaus bschmaus 98 Sep 15 10:45 metadata.json

drwxrwxr-x. 3 bschmaus bschmaus 247 Sep 15 10:45 .
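The two items in that listing that the remaining steps depend on are the ignition file and the kubeconfig under auth. A small sketch for confirming both are present and non-empty before moving on (the function name `check_sno_assets` is hypothetical, and its argument is the directory that was passed to `--dir`):

```shell
# Check that the files the later steps rely on exist and are non-empty.
check_sno_assets() {
  local dir="${1:-.}" rc=0 f
  for f in bootstrap-in-place-for-live-iso.ign auth/kubeconfig; do
    if [ -s "$dir/$f" ]; then
      echo "OK      $f"
    else
      echo "MISSING $f"
      rc=1
    fi
  done
  return "$rc"
}
```

Calling `check_sno_assets .` in the working directory returns non-zero if either file is absent or empty.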

Now let's take the bootstrap-in-place-for-live-iso.ign config we generated and use coreos-installer to embed it into the RHCOS live ISO image. There is no output on completion, so I usually echo $? to confirm it exited with a good status.

$ ./coreos-installer iso ignition embed -fi bootstrap-in-place-for-live-iso.ign rhcos-live.aarch64.iso

$ echo $?

0
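An exit status of 0 says the command succeeded, but it is also possible to read the config back out of the ISO and compare it with the file on disk. This is a sketch that assumes `coreos-installer iso ignition show` prints the embedded config exactly as it was embedded; the function name `verify_embedded_ignition` and the `COREOS_INSTALLER` variable are hypothetical.

```shell
# Path to the coreos-installer binary downloaded earlier (override if needed).
COREOS_INSTALLER="${COREOS_INSTALLER:-./coreos-installer}"

# Succeeds when the Ignition config embedded in the ISO is byte-identical to the file.
# Arguments: path to the ISO, path to the ignition file.
verify_embedded_ignition() {
  local iso="$1" ign="$2"
  "$COREOS_INSTALLER" iso ignition show "$iso" | cmp -s - "$ign"
}
```

For example, `verify_embedded_ignition rhcos-live.aarch64.iso bootstrap-in-place-for-live-iso.ign && echo "embedded config matches"`.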

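Now that the RHCOS live ISO image has the ignition file embedded, we can write the image to a USB device. The device is /dev/sda on my system; confirm the device name on yours first, since dd overwrites whatever it is pointed at: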

$ sudo dd if=./rhcos-live.aarch64.iso of=/dev/sda bs=8M status=progress oflag=direct

[sudo] password for bschmaus:

948783104 bytes (949 MB, 905 MiB) copied, 216 s, 4.4 MB/s

113+1 records in

113+1 records out

948783104 bytes (949 MB, 905 MiB) copied, 215.922 s, 4.4 MB/s
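Because dd will happily overwrite an internal disk, a small guard before writing can save some pain. The following sketch checks the kernel's removable flag in sysfs for the target device. The function name `is_removable` and the `SYSFS_ROOT` variable are hypothetical, and note that some USB devices (USB to SATA enclosures, for example) report themselves as non-removable, so treat a failure as a prompt to double check rather than a verdict.

```shell
# Root of sysfs; overridable only so the function can be exercised without real hardware.
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"

# Succeeds when the kernel flags the whole-disk device (e.g. /dev/sda) as removable.
is_removable() {
  local name flag
  name="$(basename "$1")"
  flag="$(cat "$SYSFS_ROOT/block/$name/removable" 2>/dev/null || true)"
  [ "$flag" = "1" ]
}
```

In practice this could wrap the write step, as in `is_removable /dev/sda && sudo dd if=./rhcos-live.aarch64.iso of=/dev/sda bs=8M status=progress oflag=direct`.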

Once the USB device is written, connect it to the Nvidia Jetson AGX and boot from it. Keep in mind that during the first boot of the Jetson I had to hit the ESC key to get into the device manager and tell it to boot from the ISO. Then, when the system rebooted, I had to go back into the device manager to boot from my NVMe device. After that the system boots from the NVMe until the next time I want to install from the ISO. This is more a Jetson nuance than an OCP issue.

Once the system has rebooted the first time, and if the ignition file was embedded without errors, we should be able to log in as the core user with the key that was set in the install-config.yaml we used. Once inside the node we can use crictl ps to confirm containers are being started:

$ ssh core@192.168.0.47

Red Hat Enterprise Linux CoreOS 49.84.202109152147-0

Part of OpenShift 4.9, RHCOS is a Kubernetes native operating system

managed by the Machine Config Operator (`clusteroperator/machine-config`).

WARNING: Direct SSH access to machines is not recommended; instead,

make configuration changes via `machineconfig` objects:

https://docs.openshift.com/container-platform/4.9/architecture/architecture-rhcos.html

---

Last login: Fri Sep 17 20:26:28 2021 from 10.0.0.152

[core@master-0 ~]$ sudo crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

f022aab7d2bd2 4e462838cdd7a580f875714d898aa392db63aefa2201141eca41c49d976f0965 3 seconds ago Running network-operator 0 b89d47c2e53c9

c65fe3bd5a27c quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:8ad7f6aa04f25db941d5364fe2826cc0ed8c78b0f6ecba2cff660fab2b9327c7 About a minute ago Running cluster-policy-controller 0 0df63b1ad8da3

7c5ea2f9f3ce0 quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:efec74e2c00bca3688268eca7a256d865935c73b0ad0da4d5a9ceb126411ee1e About a minute ago Running kube-apiserver-insecure-readyz 0 f19feea00d442

c8665a708e33c 055d6dcd87c13fc04afd196253127c33cd86e4e0202e6798ce5b7136de56b206 About a minute ago Running kube-apiserver 0 f19feea00d442

af8c8be71a74f quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:0d496e5f28b2d9f9bb507eb6b2a0544e46f973720bc98511bf4d05e9c81dc07a About a minute ago Running kube-controller-manager 0 0df63b1ad8da3

5c7fc277712f9 quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:0d496e5f28b2d9f9bb507eb6b2a0544e46f973720bc98511bf4d05e9c81dc07a About a minute ago Running kube-scheduler 0 41d530f654838

98b0faec9e0cd quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:81262ae10274989475617ac492361c3bc8853304fb409057e75d94c3eba18e48 About a minute ago Running etcd 0 f553fa481d714

[core@master-0 ~]$ uname -a

Linux master-0.kni7.schmaustech.com 4.18.0-305.19.1.el8_4.aarch64 #1 SMP Mon Aug 30 07:17:58 EDT 2021 aarch64 aarch64 aarch64 GNU/Linux

Further, once we have confirmed containers are starting, we can also use the kubeconfig to show the node state:

$ export KUBECONFIG=~/ocp/auth/kubeconfig

$ ./oc get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master-0.kni7.schmaustech.com Ready master,worker 12m v1.22.0-rc.0+75ee307 192.168.0.47 <none> Red Hat Enterprise Linux CoreOS 49.84.202109152147-0 (Ootpa) 4.18.0-305.19.1.el8_4.aarch64 cri-o://1.22.0-71.rhaos4.9.gitd54f8e1.el8

Now we can get the cluster operator states with the oc command to confirm when installation has completed. If any operator still shows False, Unknown, or nothing at all under AVAILABLE, the installation is still progressing:

$ ./oc get co

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.9.0-rc.2 False False True 12m OAuthServerRouteEndpointAccessibleControllerAvailable: route.route.openshift.io "oauth-openshift" not found...

baremetal 4.9.0-rc.2 True False False 34s

cloud-controller-manager 4.9.0-rc.2 True False False 31s

cloud-credential 4.9.0-rc.2 True False False 11m

cluster-autoscaler

config-operator 4.9.0-rc.2 True False False 12m

console 4.9.0-rc.2 Unknown False False 8s

csi-snapshot-controller 4.9.0-rc.2 True False False 12m

dns 4.9.0-rc.2 True False False 109s

etcd 4.9.0-rc.2 True False False 6m20s

image-registry

ingress Unknown True Unknown 15s Not all ingress controllers are available.

insights 4.9.0-rc.2 True True False 32s Initializing the operator

kube-apiserver 4.9.0-rc.2 True False False 96s

kube-controller-manager 4.9.0-rc.2 True False False 5m3s

kube-scheduler 4.9.0-rc.2 True False False 6m14s

kube-storage-version-migrator 4.9.0-rc.2 True False False 12m

machine-api 4.9.0-rc.2 True False False 1s

machine-approver 4.9.0-rc.2 True False False 55s

machine-config 4.9.0-rc.2 True False False 38s

marketplace 4.9.0-rc.2 True False False 11m

monitoring Unknown True Unknown 12m Rolling out the stack.

network 4.9.0-rc.2 True False False 13m

node-tuning 4.9.0-rc.2 True False False 11s

openshift-apiserver 4.9.0-rc.2 False False False 105s APIServerDeploymentAvailable: no apiserver.openshift-apiserver pods available on any node....

openshift-controller-manager 4.9.0-rc.2 True False False 75s

openshift-samples

operator-lifecycle-manager 4.9.0-rc.2 True False False 24s

operator-lifecycle-manager-catalog 4.9.0-rc.2 True True False 21s Deployed 0.18.3

operator-lifecycle-manager-packageserver False True False 26s

service-ca 4.9.0-rc.2 True False False 12m

storage 4.9.0-rc.2 True False False 30s
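Rather than rerunning the command by hand, the same check can be scripted. The sketch below asks for each operator's Available condition via jsonpath instead of parsing the table, since rows with blank columns are awkward to split on whitespace. The function names, the `OC` variable, and the default polling interval and attempt count are my own arbitrary choices for illustration.

```shell
# oc binary to use (this post calls ./oc from the working directory).
OC="${OC:-./oc}"

# Print the name of every cluster operator whose Available condition is not "True".
# Returns non-zero if the API cannot be queried or no operators are listed yet.
pending_operators() {
  local out
  out="$("$OC" get clusteroperators \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.conditions[?(@.type=="Available")].status}{"\n"}{end}')" || return 1
  [ -n "$out" ] || return 1
  printf '%s\n' "$out" | awk 'NF && $2 != "True" { print $1 }'
}

# Poll until every operator reports Available; gives up after the given attempts.
# Arguments: seconds between polls (default 60), number of attempts (default 90).
wait_for_operators() {
  local interval="${1:-60}" attempts="${2:-90}" i=0 pending
  while [ "$i" -lt "$attempts" ]; do
    if pending="$(pending_operators)" && [ -z "$pending" ]; then
      return 0
    fi
    echo "waiting on: $(echo ${pending:-API not answering yet})"
    sleep "$interval"
    i=$((i + 1))
  done
  return 1
}
```

With KUBECONFIG exported as above, `wait_for_operators 60 90` would poll once a minute for up to 90 minutes and return 0 once nothing is left pending.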

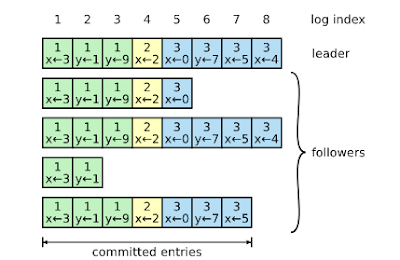

Finally, after about 30 to 60 minutes, we can see that our single node cluster has completed installation:

$ ./oc get co

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.9.0-rc.2 True False False 5m3s

baremetal 4.9.0-rc.2 True False False 8m24s

cloud-controller-manager 4.9.0-rc.2 True False False 8m21s

cloud-credential 4.9.0-rc.2 True False False 19m

cluster-autoscaler 4.9.0-rc.2 True False False 7m35s

config-operator 4.9.0-rc.2 True False False 20m

console 4.9.0-rc.2 True False False 4m54s

csi-snapshot-controller 4.9.0-rc.2 True False False 19m

dns 4.9.0-rc.2 True False False 9m39s

etcd 4.9.0-rc.2 True False False 14m

image-registry 4.9.0-rc.2 True False False 4m52s

ingress 4.9.0-rc.2 True False False 7m4s

insights 4.9.0-rc.2 True False False 8m22s

kube-apiserver 4.9.0-rc.2 True False False 9m26s

kube-controller-manager 4.9.0-rc.2 True False False 12m

kube-scheduler 4.9.0-rc.2 True False False 14m

kube-storage-version-migrator 4.9.0-rc.2 True False False 20m

machine-api 4.9.0-rc.2 True False False 7m51s

machine-approver 4.9.0-rc.2 True False False 8m45s

machine-config 4.9.0-rc.2 True False False 8m28s

marketplace 4.9.0-rc.2 True False False 19m

monitoring 4.9.0-rc.2 True False False 2m24s

network 4.9.0-rc.2 True False False 21m

node-tuning 4.9.0-rc.2 True False False 8m1s

openshift-apiserver 4.9.0-rc.2 True False False 5m9s

openshift-controller-manager 4.9.0-rc.2 True False False 9m5s

openshift-samples 4.9.0-rc.2 True False False 6m57s

operator-lifecycle-manager 4.9.0-rc.2 True False False 8m14s

operator-lifecycle-manager-catalog 4.9.0-rc.2 True False False 8m11s

operator-lifecycle-manager-packageserver 4.9.0-rc.2 True False False 7m49s

service-ca 4.9.0-rc.2 True False False 20m

storage 4.9.0-rc.2 True False False 8m20s

And from the web console: